i see bots

In the future, you won't think this is so weird

1-Wire

“1-Wire” is the name for a device bus protocol designed to be inexpensive, easy to connect, and easy to interface with.

The name “1-Wire” is a marketing take on the fact that data is conducted over a single wire. You still need a ground connection, but “2-Wire” presumably didn’t sound as interesting. In this configuration, devices on the bus operate in “parasitic” mode, storing power accumulated during bus transitions. In reality, I found it more reliable to operate in “3-Wire” mode, where you send some power along a third wire. The specification was originally introduced by Dallas Semiconductor, which was subsumed into Maxim via acquisition.

See Maxim’s list of devices and bus infrastructure. The DS18S20 temperature sensors I’m most familiar with look like your average transistor (image from here):

A given implementation of a 1-Wire bus, which Maxim refers to as a “microlan”, is composed of a bus controller or “master”, the physical wire segments of the network itself, and the “slave” 1-Wire devices attached to those segments. The bus controller can be a dedicated hardware device, which connects to the 1-Wire bus on one end and, say, an RS-232 port on the other, and implements the requisite bus protocol. Alternatively, you can connect the bus directly to a PC’s serial port via a simple circuit, and work the bus in software on the PC.

In theory, any number of 1-Wire devices can be attached to a 1-Wire bus, since each device has a 64-bit unique identifier burned into it, which the controller/master uses to track the slave devices. However, there are important topological considerations to take into account, given the electrical characteristics of the wire segments of the network, which, due to impedance “weights”, can introduce signal reflections. Maxim covers these topics in a design note (here), to help explain which network topologies to avoid (such as “star” networks), and which ones to favor.
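To make the 64-bit identifier a little more concrete, here is a minimal Python sketch of how a controller might split and check one. It relies on the standard 1-Wire ROM layout (an 8-bit family code, a 48-bit serial number, and an 8-bit CRC computed over the first seven bytes). The names `crc8_maxim`, `RomId`, and `parse_rom_id` are made up for this illustration and are not part of any particular bus controller's software.

```python
from dataclasses import dataclass


def crc8_maxim(data: bytes) -> int:
    """Dallas/Maxim CRC-8 (x^8 + x^5 + x^4 + 1), processed LSB first."""
    crc = 0
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 0x01:
                crc = (crc >> 1) ^ 0x8C  # 0x8C is the reflected polynomial
            else:
                crc >>= 1
    return crc


@dataclass(frozen=True)
class RomId:
    family_code: int  # identifies the device type
    serial: bytes     # 48-bit serial number, in bus order
    crc: int          # CRC-8 over the family code and serial


def parse_rom_id(rom: bytes) -> RomId:
    """Split an 8-byte ROM ID (in bus order) and verify its CRC."""
    if len(rom) != 8:
        raise ValueError("a 1-Wire ROM ID is exactly 8 bytes")
    if crc8_maxim(rom[:7]) != rom[7]:
        raise ValueError("CRC mismatch: corrupted read or noisy bus")
    return RomId(family_code=rom[0], serial=rom[1:7], crc=rom[7])
```

A failed CRC check on an ID read is one cheap way for software to notice the kind of signal-integrity trouble that a poor topology introduces.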

I started using 1-Wire nearly 10 years ago, because I found an inexpensive weather station from AAG based on 1-Wire devices. I mounted the weather station on the roof and ran a couple (ok, 3) wires down to the basement, which is where a Midon Design bus controller (at the time, “TEMP05”, but he’s up to “TEMP08” now!), connected via RS-232 to an always-on PC, was located. On the PC, I ran Homeseer: a script would pull ASCII weather data generated by the TEMP05 and log it, and I had other scripts to graph weather trends. The weather station used a 1-Wire temperature sensor for measuring outside temperature, and other 1-Wire devices to transmit wind speed and direction. That setup worked for quite a few years, until our Pacific Northwest weather did it in. I’ve since graduated to a Davis Instruments weather station - more on that later.

Along the way, I also hooked up a couple of DS18S20 temperature sensors, mostly out of curiosity, and it was through these experiments that I learned a bit about best practices - for my house, anyway - for implementing a reliable 1-Wire bus. I wanted to use, where possible, the existing CAT-5 home-run wiring I had installed in the house… but given that I had a single bus controller - the TEMP05 - I knew that a star configuration would not work. So I had to daisy-chain together the network segments, including the long run to the weather station on the roof. I found that this configuration didn’t work reliably under parasitic power, but fortunately, the TEMP05 device makes it easy to provide the +5V Vdd needed to power the microlan.

This helped, but it still wasn’t rock-solid reliable. In most cases, it didn’t matter, given that I could poll for temperature readings every minute or so and average the results. Adding reflection-dampening resistors (per Midon Design advice) helped some. But I didn’t like the idea that I had to custom-tune the network every time I changed or added devices. A more robust and easy-to-get-right solution seemed to require multiple controllers, perhaps one per physical network leg?
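The poll-and-average idea can be sketched in a few lines of Python. This is only an illustration, not the script used here: the function name `smooth_reading`, the use of `None` for a failed read, and the outlier cutoff `max_deviation` are all assumptions added for the example.

```python
from statistics import mean, median_low
from typing import Optional, Sequence


def smooth_reading(
    samples: Sequence[Optional[float]],
    max_deviation: float = 5.0,
) -> Optional[float]:
    """Average recent polls, skipping failed reads and obvious glitches.

    `samples` holds the last few temperature polls in degrees C, with
    None standing in for a poll that failed on the bus.
    """
    valid = [s for s in samples if s is not None]
    if not valid:
        return None  # every poll failed; let the caller decide what to do
    # median_low always returns an actual sample, so at least one value
    # survives the outlier filter below.
    mid = median_low(valid)
    kept = [s for s in valid if abs(s - mid) <= max_deviation]
    return mean(kept)
```

For example, `smooth_reading([20.0, 20.5, None, 85.0, 19.5])` drops the failed poll and the implausible 85.0 sample, then averages the remaining three.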

Along that line of thinking, I tried out a relatively inexpensive ($29) bus controller, the “CK0110” four-channel kit from Carl’s Electronics, which seemed to be able to support 4 microlans. That helped.

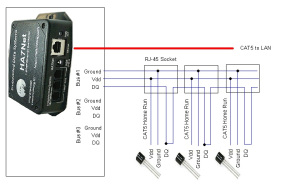

At some point, I stumbled upon Embedded Systems’ TCP/IP-based bus controller, the HA7Net, which does this: you connect up to three microlans to it, add power, and patch it into your LAN. It looks like this:

You can then point a browser at it and query the devices that it is managing on any of the three microlans. Furthermore, you can connect to it via a telnet session, which is how I integrated it into Homeseer (via the Ultra1Wire plugin, a newer version of which can be found here). I liked this approach because it meant one less RS-232 serial port to deal with (although this turns out to have been a temporary phenomenon, given the approach I took to integrating with the A/V receiver and monitor - see this post for the details).

The scenarios supported by these 1-Wire temperature sensors had expanded at this point to include:

- Turn on bathroom fans if someone is taking a shower, run for 10 minutes after shower ends (this one took a while to pull off, but now it seems perfectly commonplace)

- Turn on Kitchen fan if high temperature detected over the stovetop

- If temperature in computer rack gets too high, warn

(See this list for a complete list of implemented and planned scenarios)

Those scenarios required 5 or 6 sensors, which means that, with the HA7Net’s support for only three microlans, I would have to daisy-chain a few of the sensors, all of which were hanging off the far ends of CAT-5 homeruns, in the various corners of the house. The approach I took was to wire up a daisy-chain junction box, located in the basement. Here’s a photo:

At each device, one conductor brings the DQ signal line “in” to the device, and another conductor takes it “out” to the next device down the line. All devices are wired to the same power and ground conductors. The junction box down in the basement contains RJ-45 sockets into which patch cables, each one representing a device, are plugged in. One end of the chain goes to the HA7Net.

The “Uplink” cable connects to the HA7Net. The patch cables connected to sockets labeled “1”, “2”, and “3” connect to various 1-Wire temperature sensors, via the house patch panel.

Here’s a schematic:

This is the setup I’m using today. It’s reliable and easy to work with, and the availability of a well-built Homeseer plugin (the Ultra1Wire mentioned above) makes system integration very easy.

If I were starting over now, I would probably end up with a similar arrangement, despite the proliferation of cheap TCP/IP-enabled hacker boards. It’s hard to beat the <$5 price for a sensor-cum-network adapter. And the HA7Net bus master, while not cheap, is reasonably priced given that it’s TCP/IP-enabled and, since it supports three microlans, effectively means that you’d only need one in your house. (You could also substitute an Arduino, using the 1-Wire library.) If I were to try to build a network of temperature sensors that, for instance, is based on TCP/IP instead of 1-Wire, the per-device price would be much higher - for instance, the Arduino ethernet shield goes for $39-$45.

This is a good place to make a general point about the world of devices or the so-called “Internet of Things” (see overview post)… while TCP/IP will be the dominant protocol at the high level, since that’s how our top-level controlling computers are connected, down at the leaf nodes, where cost and size factors are important, alternate special-purpose protocols, such as 1-Wire, will be in abundance. Other examples include: the ZigBee wireless protocol, with low-power boards starting at $23, Bluetooth for $45, and the “Nordic” nRF24L01+ series, starting at $34… while some of these prices approach that of, say, the TCP/IP-based WiFi protocol, their physical size and complexity are much lower in comparison.

Even as the cost of TCP/IP-enabling devices drops, the lower boundaries (size, cost) will also continue to drop. I think the thing to focus on is that 1-Wire devices are addressable, given their unique IDs, and can connect to a bus that can be bridged (via devices like the HA7Net) to TCP/IP and therefore the Internet. Thus: not every connected device in the world will have a TCP/IP address, but you’ll still be able to talk to it, learn its state, and issue commands to it. That’s OK, I think.

NOTES

- Carl’s Electronics offers an inexpensive RS-232-based 4-port 1-Wire bus controller kit.

- Quozl (http://quozl.us.netrek.org) is an interesting fellow and offers an interesting site, describing a number of open source projects and hacks. He supplied the code for the above-mentioned 4-port 1-Wire bus master.

- Arduino support for 1-Wire: background and library: http://www.arduino.cc/playground/Learning/OneWire

- More background on 1-Wire, including links to software for directly driving the 1-Wire bus from a PC: http://www.arunet.co.uk/tkboyd/e1didx.htm.

- Hobby Boards (http://www.hobby-boards.com/catalog/index.php) offers a range of 1-Wire devices and kits, including: temperature, humidity, relays, displays.

- I used - for the first time - “TinyCAD”, an excellent free schematic drawing tool, to draw the 1-Wire schematic above. I found it very easy to use.

Simple

“Simplicity is the ultimate sophistication.” - Leonardo da Vinci (here and here)

I couldn’t pass up the opportunity to use a quote from L. da Vinci as an introduction to this blog post. But it really does set the right tone for a topic that’s been creeping up behind me on pad-feet, creaking the floor boards and occasionally breathing a little loudly. It’s always been there, but now it’s time to write about it.

A main theme for home automation should be, I think, to add value - in terms of convenience, security, energy efficiency, etc - while simplifying. Put another way, if you bring a new scenario to bear, don’t do it in such a way as to add complexity. Just add value, not complexity. Hold the complexity! Or make something simpler than it was.

It’s hard to make something simple, or simpler, while adding cool new functionality. Another quote is due:

To paraphrase Einstein (“simply” because I can):

“Make things as simple as possible, but not simpler” - Einstein (here)

Some questions to ponder when considering adding a new scenario:

- How “natural” does the new scenario seem to the average user?

- How much “training”, if any, is required?

- How much of what the user already knows can be used to leverage the new scenario?

- How robust is the scenario in the face of unexpected user actions or input, perhaps using existing controls or devices? Or other failures (such as power failures, loss of internet connectivity, etc)?

- How are existing scenarios changed?

- What additional “workloads” does the new scenario introduce for the user?

For example, some considerations when installing light switches that can be controlled via Home Automation software:

- Do the switches operate like ordinary switches? Will users just “know” how to use them, because they operate just like other switches around the house?

- What “value” are you introducing with these fancy new switches… is it for energy conservation? Security? What plan will you put in place to avoid confusing or frustrating users, or, worst-case, leaving them tripping in the dark, feeling the walls for the switch for the light that just turned off for some reason?

- If the HA software is programmed to turn the lights off under certain conditions, how will users react? Can this automation be over-ridden?

- If the HA software is programmed to turn the lights on under certain conditions, will it also have a plan to turn them off (to conserve power or reduce user workload)?

Consider a (newer) Z-Wave light switch. One of the things I like about Z-Wave is that my controller software (Homeseer) can quickly detect when the user turns the switch on or off (see note 1). This means that I can fire events based on a change in the state of the switch. So I can implement a timer for the switch: if the user turns on the light, I want it to be turned off in, say, 20 minutes, because people in my house don’t know how to turn off lights (or so it seems). So far, so good. But, if someone turns the light on and then off 5 minutes later (that would be me) and THEN turns it back on a minute later, that 20 minute timer needs to be reset. It takes some scripting to make this happen (to clear out any pending “off” events for that switch).
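The timer-reset logic is easier to see in code than in prose. Below is a minimal Python sketch of it; this is not Homeseer's scripting API, and the class name `AutoOffTimer` and its methods are invented for the illustration. Time is passed in explicitly (in seconds) so the behavior is easy to follow.

```python
from typing import Optional


class AutoOffTimer:
    """Auto-off timer that restarts on every 'on' and clears on 'off'."""

    def __init__(self, delay_s: float = 20 * 60) -> None:
        self.delay_s = delay_s
        self.off_deadline: Optional[float] = None  # None = nothing pending

    def on_switch_changed(self, is_on: bool, now: float) -> None:
        """Call whenever the switch reports a state change."""
        if is_on:
            # Any earlier pending "off" is replaced, which restarts the clock.
            self.off_deadline = now + self.delay_s
        else:
            # The user turned the light off; drop the pending "off" event.
            self.off_deadline = None

    def should_turn_off(self, now: float) -> bool:
        """Poll periodically; returns True once when the deadline passes."""
        if self.off_deadline is not None and now >= self.off_deadline:
            self.off_deadline = None
            return True
        return False
```

Walking through the case above: on at minute 0, off at minute 5, on again at minute 6. The light stays on at minute 20 and is switched off at minute 26, because the second "on" replaced the original deadline.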

This is an example of using some extra cycles (in the form of additional scripting) to hide the complexity from the user and build in some robustness. It’s also an example of the importance of 2-way communication between devices. It’s hard to create a smart scenario where unpredictable humans are involved if the controls involved aren’t able to communicate bi-directionally. Early Z-Wave devices, and earlier technologies, such as X-10, could not do a good job of keeping the HA software in the loop when the state of the device changed. If the user turned on a light, the HA software might not know it, or might not know it for several minutes. If you were interested in adding value with these switches, it had to be done carefully to avoid frustrating the user.

Another example: what happens when you “automate” the A/V stack in your living room employing the usual approach of programming a fancy universal remote control (see note 2)? If my household was any indication, you’re introducing a new world of hurt. It seems that the usual approach leaves out the possibility that the user might have the audacity to actually touch the equipment, or perform a step in some order other than what’s been prescribed. This is usually because the typical remote control communicates in 1 direction only: it talks, but does not listen. It has no clue as to whether those commands have been correctly received, or whether its own model for the state of the components it thinks it’s controlling is accurate (see note 3). If the hapless user happens to, say, turn on the DVD player by pushing the power button on the player, in order to, say, insert a DVD, and then picks up the universal remote to “Play DVD”, confusion results. The hapless universal remote, not knowing that the DVD player is already on, will likely send a “power toggle” remote control signal to the DVD player, which will promptly turn it off. The remote is none the wiser. The user, though, is sure that something is screwed up. In an ideal world, the remote and the controlled components would talk to each other. In a slightly-less but still workable world, all component manufacturers would implement discrete remote codes for “power” commands and the like - not toggle commands.
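The DVD mishap can be reproduced with a toy model. The Python sketch below is purely illustrative: `Component` and `OneWayRemote` are hypothetical classes, not a model of any real remote's firmware. The remote keeps its own belief about the power state and never hears back from the component.

```python
class Component:
    """A device with a power state, e.g. a DVD player."""

    def __init__(self) -> None:
        self.powered = False

    def press_front_panel_power(self) -> None:
        self.powered = not self.powered  # the human touches the equipment

    def receive_toggle(self) -> None:
        self.powered = not self.powered  # "if on, turn off; if off, turn on"

    def receive_discrete_on(self) -> None:
        self.powered = True  # harmless if already on


class OneWayRemote:
    """A remote that talks but does not listen."""

    def __init__(self, component: Component, use_discrete_codes: bool) -> None:
        self.component = component
        self.use_discrete_codes = use_discrete_codes
        self.believed_on = False  # the remote's private guess

    def ensure_on(self) -> None:
        if self.use_discrete_codes:
            self.component.receive_discrete_on()
        elif not self.believed_on:
            self.component.receive_toggle()
        self.believed_on = True


# Toggle codes: the user powers the player on by hand, then the remote
# "turns it on" and actually switches it off.
dvd = Component()
remote = OneWayRemote(dvd, use_discrete_codes=False)
dvd.press_front_panel_power()
remote.ensure_on()
toggle_result = dvd.powered

# Discrete codes: same sequence, but the player stays on.
dvd2 = Component()
remote2 = OneWayRemote(dvd2, use_discrete_codes=True)
dvd2.press_front_panel_power()
remote2.ensure_on()
discrete_result = dvd2.powered
```

With toggle codes the player ends up off while the remote believes it is on; with discrete codes the remote's guess no longer matters.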

In our house, we’ve moved beyond universal remote controls. At some point, it struck me as to how much of a compromise they represented, in terms of the user experience. Instead of using the remote that came with the component - DVD player, game console, tuner, etc - we were trying to shoe-horn all of the specific functions into a single, large, oddly-shaped remote that also tried to control the components using a 1-way protocol.

It slowly dawned on me that I could take a contrarian approach: if you want to watch a DVD, why not pick up the DVD remote and just use that remote for everything? That remote obviously already has a power button. And, the kicker is, it also has volume up/down buttons - presumably because it can control receivers from the same manufacturer or be pressed into service as a universal remote. So everything one needs to watch a DVD - power, volume, transport, and other DVD-specific functions - are represented as buttons on that one remote. So why not design a DVD scenario around that DVD remote? Similarly, the game console remote had all the buttons one needs to use it as well as a set of volume and mute buttons. Well, this is odd. Let’s go with it.

The approach I took was to reliably and in real-time mirror as software variables in the HA software (virtual devices in Homeseer) the power state of each component. If the DVD player was turned on, I needed the corresponding “DVD Power” virtual device in Homeseer to change instantly to “On”. Ditto the Xbox and any other source components. This was important to get right in order to handle the situation where a pesky human touches something in the A/V stack to, say, switch out a DVD. It took a while to figure out how to do this. For now, I’ll summarize it as follows:

- DVD player: my particular player, a Sony BluRay player, sports a USB port on the front. When the player is powered on, that port is powered up. I built a simple circuit to sense when +5 volt signal is present at that port and change the state of the “DVD Power” virtual device in Homeseer to “On”. When the +5 volt signal is removed, the virtual device state is set to “Off”.

- Xbox: took a bit of work (and voided the warranty!). The Xbox also has USB ports, but these remain powered on even when the Xbox is “off”. Foiled! So the trick I used for the DVD player wouldn’t work here. I did, however, find a way to tap into the wires leading to the fans, which are fed a varying level of voltage when the console is on (presumably depending on how hot the console is). I built a simple circuit to detect when any positive voltage is present on the fans, and update an “Xbox Power” virtual device in Homeseer accordingly.

Once I had power state variables I could rely on, I was on the way to implementing this “pick up just the remote for the source you want” scenario. If you want to watch a DVD, pick up that remote and hit the power button. The DVD player will turn on, and Homeseer will take note of it: it will run some additional scripts to turn on the Receiver and the Monitor (which entailed making use of the RS-232-based command sets offered by those two components). Enabling the use of the DVD remote’s volume up/down buttons took more work, involving a PC-based IR receiver/transmitter (the USB UIRT).
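The core of that reaction logic can be sketched as a small Python function. This is a loose illustration rather than the actual Homeseer scripts: the command strings are placeholders (not real RS-232 commands), and both the input-selection step and the "everything off when no source is on" rule are assumptions added for the example.

```python
from typing import Dict, List


def plan_actions(source_power: Dict[str, bool], changed: str) -> List[str]:
    """Decide what to send after the source named `changed` flips state.

    `source_power` mirrors the virtual devices, e.g.
    {"DVD": True, "Xbox": False}.
    """
    if source_power[changed]:
        # A source was just turned on: bring up the rest of the stack.
        return [
            "receiver: power on",
            "monitor: power on",
            f"receiver: select input {changed}",
        ]
    if not any(source_power.values()):
        # Assumed rule: the last source went off, so shut the stack down.
        return ["receiver: power off", "monitor: power off"]
    # Another source is still on; leave the receiver and monitor alone.
    return []
```

Because the decision is driven by the mirrored power state rather than by which button was pressed, it behaves the same whether the user used a remote or the front panel.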

I’ll post a more detailed explanation of all this in a follow-up post. But the bottom line is that it’s all working now. The existing controls - the remotes, the buttons on the components themselves - still work as expected, and in fact complement the new scenario. The universal remote control is packed away in the closet, beeping now and then as its battery fades.

A final example involves a scenario inspired by a contractor who was working in our bathroom. He kept saying, “Get it out! Get it all out!!”, when we talked about sizing the exhaust fan. He really (really) believed in the importance of clearing the bathroom quickly of steam from the shower. If not, you run the risk of mildew or rot and the resulting structural problems. Always run the fan while showering, and then for 10-15 minutes afterwards.

In my house, however, few other occupants really took this message to heart. If they remembered to turn the fan on, it was typically after the shower was over and the walls were already dripping with condensation. And no one remembered to turn it off after the required 15 minutes, thus triggering complaints from me about noise and wasted electricity.

I moved the fan to a Z-Wave controlled circuit, and scripted it so that it would turn off automatically after 15 minutes. That’s a good start, but doesn’t solve the problem of getting people to turn the fan on during the shower, not after it’s over and the place is already fogged up.

What would the average user expect when asked to describe an “automatic shower fan”? I’d say this: the fan should turn on automatically when someone starts the shower, and not turn off until 15 minutes after the shower ends. That’s a great goal statement. But, as you can imagine, from an implementation perspective, it seemed like a downright gnarly problem to solve. But it was solved, albeit with some extra hardware (an HA7Net hub and 1-Wire devices) and more-complex-than-usual scripting. The “simplest solution” has been working well for a couple of years now. More on that later.
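The goal statement maps onto a small state machine. The Python sketch below is one possible shape for it, not the implementation described here: it assumes a 1-Wire temperature sensor whose reading rises noticeably while the shower runs (where it is mounted is left open), and the class name `ShowerFan`, the `baseline_c` value, and the `rise_c` threshold are all hypothetical.

```python
from typing import Optional


class ShowerFan:
    """Fan on while the shower runs, off a fixed time after it ends."""

    def __init__(
        self,
        baseline_c: float,
        rise_c: float = 3.0,
        run_on_s: float = 15 * 60,
    ) -> None:
        self.baseline_c = baseline_c  # assumed resting temperature
        self.rise_c = rise_c          # assumed rise that means "shower on"
        self.run_on_s = run_on_s      # keep running this long afterwards
        self.fan_on = False
        self.off_deadline: Optional[float] = None

    def update(self, temp_c: float, now: float) -> bool:
        """Feed in each new reading; returns whether the fan should be on."""
        showering = temp_c >= self.baseline_c + self.rise_c
        if showering:
            self.fan_on = True
            self.off_deadline = None  # cancel any run-on countdown
        elif self.fan_on:
            if self.off_deadline is None:
                # The shower just ended: start the run-on countdown.
                self.off_deadline = now + self.run_on_s
            elif now >= self.off_deadline:
                self.fan_on = False
                self.off_deadline = None
        return self.fan_on
```

A real version would also need to handle a drifting baseline and noisy readings, which is presumably part of why the scripting ended up more complex than usual.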

In closing: I like ThingM’s motto: “Smart devices make things simple”. I think that’s a good criterion to apply when deciding whether your ‘bots and automation plans are actually adding value.

Notes:

- If I’ve set it up correctly. Due to various issues with various versions of Homeseer, it’s not a given that this is always the case. More on this later.

- I’ve owned two Logitech Harmony Universal Remotes, but, alas, I can’t recommend them. Both have ended up being disappointing for various reasons… the programming experience is awful, but it pales next to the issues caused by the poor product build quality, hardware flakiness, and support. The most recent model I owned is the Harmony 890, which can send signals via IR or RF. If you’re considering one of these, please take a look at the reviews on Amazon first. On paper, it showed promise…

- Too often, component manufacturers take the lazy approach to the power button on the remote, by implementing it as a toggle. Pushing the “power” button on the average remote often sends a single command which the component interprets as this: “if you’re on, turn off; if you’re off, turn on”. This means that the average universal remote must remember if a component is on or off in order to implement a scenario like this: “the user is currently watching a DVD but would now like to play a game on the game console… so, turn off the DVD player and turn on the game console”. If the remote thinks that the game console is already on, it will skip sending a “toggle power” remote code. If, on the other hand, the game console has discrete “power on” and “power off” codes, the remote can confidently send “power on”, which does nothing if the game console is already on. I think that “discrete codes” are one of the marks of a component/device that’s designed to be system-integrated.

The Internet of Things

When I started this “iseebots” blog, I blithely assumed that it was self-evident what terms like ‘Bot’ or ‘Connected Device’ mean.

Similarly, every time I heard the term “Internet of Things”, I blithely assumed I knew what that term meant (and that my interpretation matched everyone else’s 🙂).

Boy, was I wrong. So here’s an informal summary of a quick look-see into the “Internet of Things”. My first, and probably not my last.

As a meme, “Internet of Things” (IoT) has hit the big time. There are lots of blog posts, dedicated media site coverage, top-ten lists, a few conferences, distinguished research labs are hiring researchers, a council, a consortium, analyst coverage, a couple of startups, and – w a i t f o r i t – a Wikipedia entry.

OK, so IoT is here. What is it, then?

The upshot of my quick and non-scientific investigation is that, for many people, at this point in time, IoT describes the emerging mesh of self-identifying objects that helps keep track of things for us (and, in a dystopian world, helps our governments keep track of us). In the short-term, think RFID.

The CASAGRAS (“Coordination And Support Action for Global RFID-related Activities and Standardisation”) council (in the EU) discusses various definitions, including one offered by an SAP Researcher: “A world where physical objects are seamlessly integrated into the information network, and where the physical objects can become active participants in business processes.”

Businesses, especially those with inventory or supplies, etc, need to stay abreast of this trend. Now! The “Internetome” conference announced itself with this warning: “The Internet of Things is here now, and it’s going to get big and quickly…The earlier your organisation gets to grips with the opportunities, as soon as you can identify and plot a journey over the hurdles and around the pitfalls… the sooner you can innovate to maintain and grab competitive advantage.”

IBM seems to have made IoT an important aspect of their “Smarter Planet” initiative / strategy / other, the need for which they motivate like so:

“At IBM, we mean that intelligence is being infused into the systems and processes that make the world work—into things no one would recognize as computers: cars, appliances, roadways, power grids, clothes, even natural systems such as agriculture and waterways.”

A key capability revolves around all that data that’s being generated by all of those devices:

Data is being captured today as never before. It reveals everything from large and systemic patterns—of global markets, workflows, national infrastructures and natural systems—to the location, temperature, security and condition of every item in a global supply chain. And then there’s the growing torrent of information from billions of individuals using social media. They are customers, citizens, students and patients. They are telling us what they think, what they like and want, and what they’re witnessing. As important, all this data is far more real-time than ever before.

And here’s the key point: data by itself isn’t useful. Over the past year we have validated what we believed would be true—and that is, the most important aspect of smarter systems is data—and, more specifically, the actionable insights that the data can reveal.

Anyway, “Smarter Planet” is at a… planet-like scale that only IBM could muster – the SmarterPlanet website is huge and the range of IBM products and services huger. It seems they’ve wrapped their entire business around this concept. More on this later.

+++

The writer Bruce Sterling invented the term “spime” to describe a class of devices with these characteristics:

- Small, inexpensive means of remotely and uniquely identifying objects over short ranges; in other words, radio-frequency identification.

- A mechanism to precisely locate something on Earth, such as a global-positioning system.

- A way to mine large amounts of data for things that match some given criteria, like internet search engines.

- Tools to virtually construct nearly any kind of object; computer-aided design.

- Ways to rapidly prototype virtual objects into real ones. Sophisticated, automated fabrication of a specification for an object, through “three-dimensional printers.”

- “Cradle-to-cradle” life-spans for objects. Cheap, effective recycling.

(from Wikipedia)

This definition covers a lot of ground, and specifies aspects of not just the “things” in the IoT but also the “means” for those things (tools for design and rapid prototyping and fabrication – think RepRap and the like) and methods for dealing with the expected rivers of data coming from them. On that last point: the “OpenSpime” developer network (appears to be defunct) was created to “implement an open protocol for an open internet of things”, based on an extension of the XMPP messaging protocol. (I wonder what overlap, if any, there might be with xAP?).

WideTag has adopted this spime-centric view of the IoT, including a characterization into “Category 0” and “Category 1” spimes.

+++

IoT has some people worried, and may in fact cause a run on tin foil. The Internet of Things council casts the challenge of the age as “transcending the short-term opposition between social innovation and security by finding a way to combine these two necessities in a broader common perspective” and “It holds dangers, but it also holds promises” and “defensive, driven by design principles of control and fear and has in the past six years not been able to create much enthusiasm, on the contrary, it has sparked lots of defensive debates on transparency, privacy and fear mongering”. Besides wrapping your passport in tin-foil, perhaps merchants should proactively ‘blow the fuse’ on RFID tags when the sale is consummated, thus rendering the tag useless for future tracking?

ReadWriteWeb describes a possible future where countless individual pieces of information from your environment are recorded, transmitted, and fused into a larger, all-knowing panorama of one’s activities: “imagine a future where all objects are “social” data-collectors who can report their use, their history, their location, etc. Now imagine the government or corporations accessing that data and taking action based on what the objects’ data tells them”.

As an example of what could be coming… how many optical gyroscopes can fit on the head of a pin?

+++

This RFID focus is a narrow, short-term view of IoT, based on my informal research. The longer-term view is harder to define: “Our future with the Internet of Things is still quite unclear. But initial glimpses of it can be seen through applications of RFID technology” (The Internet of Things Council).

So… a number of folks are thinking about a world where a critical mass of everyday things are self-identifying and perhaps can even sustain a conversation with you or your electronic delegate. In that future, our relationship with those things will be significantly different. Given that Twitter’s 140 character limit has set the bar here, it might not take much for an object to pass itself off as being part of a conversation of some kind, even if it’s being ‘followed’ only by other objects. We are already seeing Tweeting houses, buoys, and what not.

I think Social Node expresses it best:

“Over the next 5 years the web will rapidly spread into the world. This will not necessarily require the abundant, cheap sensors typically referenced in conversations about The Internet of Things (which is more about direct object-to-object communication). Instead, it’s more likely that prosumers will enrich rich virtual mirror worlds and then access them via geo-coordinates at home or on the go. “

Which is what these companies are enabling: the association of social content – photos, videos, etc - with specific, physical objects, through tags that you attach or otherwise map to the objects:

- StickyBits: “A fun and social way to attach digital content to real world objects”, by mapping a bar code on something – a business card, a cereal box, a car, etc - to your content - a video, document, photo, etc. Someone comes along and scans the code, and ‘retrieves’ what you’ve left there.

- Tales of Things: Proclaiming, “It’s a memory thing”, you can connect “anything with any media, anywhere”. Appears similar to StickyBits except using QR codes that you print on your own.

- Itizen: “a place to tell, share, & follow the life stories of interesting things”… appears similar to StickyBits, except with custom tags that you buy or print on your own.

- pachube: “Store, share & discover realtime sensor, energy and environment data from objects, devices & buildings around the world. Pachube is a convenient, secure & scalable platform that helps you connect to & build the ‘internet of things.” Cool mashup mapping devices from all over the world.

The “ELEARNSPACE” blog gushes about how this eventuality – social objects - will likely have a greater impact than social media (take that, Zuckerberg!):

“As more devices connect to the internet – cars, home security systems, utility monitoring – and as more objects include RFID tags, the physical world begins to merge with the digital world. I can search for my car keys the same way I search for a research paper. Social media is an overlay of socialization on top of our physical worlds. The internet of things is an integration of physical and virtual worlds, permitting the most desirable elements of each to exist in the other.”

Social Node points out that the resultant river of data will be a rich target for monetization:

“There is tremendous business, consumer, and social demand in place to incentivize these flows. This pull force is getting stronger as we collectively discover new ways to unlock the value of this data.”

Which seems to be where WideTag, mentioned above in the spime discussion, comes in: a startup focused on an infrastructure for collecting and analyzing the river of data that’s expected to flow from all the IoT: “WideSpime enables the rapid and scalable development of dependable solutions based on Social Hardware and services. With the addition of WideSpime’s rich set of functionalities, your application’s adoption rate will soar!” (!)

+++

In this IoT space, an underlying theme of environmental action and responsibility is often implied or explicitly called out. For instance, WideTag’s tagline is “Realtime. Social. Green”; while I couldn’t find an explicit explanation on their site, I gather that their take is that “green technologies are going to be an exceptionally important application of widespread, bottom-up, environmental sensor technology” that is implied by an IoT.

That makes sense; if we can follow river levels via Twitter today, then tomorrow, via small wireless devices, could we be following Tweeting salmon (“Hey, who put that damn dam there??”) or glaciers (“Is it me or is it getting warmer around here?”) or ocean currents (“C’mon in! It’s a balmy 38 F!”)?

(OK, silly, but you get the idea.)

On the other hand, it could be that the IoT is an intrinsically non-green activity. IBM’s SmarterPlanet initiative apparently projects that there will be 30 billion RFID tags extant at some point. Whether you believe that number or not, that’s a lot of ‘things’ being created and probably not recycled when we’re done with them. I wonder if RFIDs are “RoHS compliant” in the first place… are they even designed to be recycled?

And RFIDs are very simple devices that don’t include batteries and circuit boards made of exotic and hard-to-recover materials, as you’d expect with ‘smarter’ devices. So an aspect of the ‘green’ in IoT may be a proactive reflex to stay ahead of the curve on the environmental footprint of the IoT. Note that in the “spime” definition, above, one metric or requirement was: ‘“Cradle-to-cradle” life-spans for objects. Cheap, effective recycling.’

IBM highlights a random list of case studies in the “Sustainability” section of their SmarterPlanet initiative… but it feels like they needed to fill in a marketing check-box.

I tried not to be cynical when I read what the folks running the Internetome conference had to say: “what’s good for your organisation may well be good for the planet too.”

+++

It’s been interesting learning more about IoT. I’m sure there will be more to write on in future posts. My guess is that my near-term interest area will be on ‘bespoke’ objects that are designed and built to function as 2-way connected devices or ‘Bots in the first place.

I will close with this thought (and just a couple of postscripts!): I think Adrianne Jeffries gets it right when she observes this:

IoT “got to be an overused misnomer even before the technology had a chance to become common”.

You think?

+++

Postscripts:

- I have to admit that when I run across IBM “Smarter Planet” ads in magazines, etc, my eyes glaze over instantly, rendering me incapable of understanding exactly what they’re selling (which is really what it’s about). Similarly, their pithy taglines tend to leave me a little bit dumber every time I take them in:

- “Intelligence – not Intuition – drives innovation”… I really don’t know what that means, and if I did, I’m sure I wouldn’t agree with it. Would Edison have agreed with it? I think IBM’s point is that the average enterprise or organization needs to be “data-driven” in its decisions and planning, which requires the ability to analyze and view the data from many angles: “The most important aspect of smarter systems is data—and, more specifically, the actionable insights that the data can reveal.”

- “The planet has grown a central nervous system”: Has it, really? Where’s the “brain”, then? I thought the internet was distributed and decentralized? Are we talking about Skynet here? What do they mean??

- “Welcome to the Decade of Smart”. I guess “Decade of Smarter” sounded clunky. And do they know about Diesel’s new ad campaign?

- I just realized that the EU appears to be taking the lead in all of these IoT discussions. Did you notice all those “organisations”? Should I rashly leap to any conclusions based on this? Whatever it is, WideTag has decided to export it: “WideTag, Inc. has been founded by a team of experienced entrepreneurs who, having lived in Europe, Italy, are mashing-up the Silicon Valley’s startup culture, with Europe’s strong values, social responsibility, and design driven life.”

- There’s a tangentially-related conference, “Fifth International Conference on Tangible, Embedded, and Embodied Interaction”, which seems to focus more on interactions with devices, etc: “TEI is the premier venue for cutting edge research on interaction with tangible artefacts and systems. We invite submissions of prototypes and daring ideas, tools and technologies, methods and models, as well as interactive art, interaction design, and user experience that contribute new understandings to the broad area of tangible computing, embodied interaction, interactive surfaces and embedded interactive systems.”

- Even farther afield, and just because it sounds interesting, there’s also the “Smart Fabrics 2011” event: “The conference will cover topics such as the current status of innovative smart fabric technologies in the marketplace, as well as recent application breakthroughs and adoption. The conference will be of particular interest for people involved in electronics, textiles, medical, sporting equipment, fashion, and wireless communication industries, as well as military/space agencies and the investment community.”

- On my IoT to-do list: Watch O’Reilly’s keynote on this topic. Get some of my own devices to show up on pachube.

What, no Moore’s Law for Batteries??

In a previous post, I wondered aloud:

“The implication of Moore’s law, along with implicit corollaries for energy storage technologies (batteries, capacitors, etc) – is there a law yet in Wikipedia for this??”

Turns out, there’s been some buzz about this question recently. As pointed out by Gigaom, Thomas Friedman, in an otherwise excellent piece in the New York Times (September 25, 2010) on the need for the United States to ‘drive’ an electric car program as aggressively as it did its own Moon Shot program in the 1960s, repeated an assertion that there is indeed a kind of Moore’s Law already in effect for batteries: “the cost per mile of the electric car battery will be cut in half every 18 months.” Gigaom correctly pointed out that there is no such “law” currently in effect.

Techies have been in awe of Moore’s Law and its consistent returns for so long that it’s natural for them (us) to assume that every other hard challenge of science and technology would eventually be tamed and mastered in a similar manner. So far, though, battery technology appears to be immune to this romantic notion. According to Bill Gates, “There are deep physical limits” (also reported by Gigaom) when it comes to batteries.

Perhaps Moore’s Law is really an “assertion”. As originally stated, it referred to circuit density (the “number of transistors”), but some have shown that circuit performance has actually hewed to the line as well, also doubling every 18 months. The difference between density and performance is significant, even though as things get more complicated, ‘performance’ may be hard to universally measure. As circuits have shrunk (as densities have increased), clock rates have also increased: more transistors to do more work per clock cycle, leveraged by more available cycles per second.
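To get a feel for what "doubling every 18 months" compounds to, here's a minimal Python sketch. The 18-month period is the figure quoted above; the function name and the sample time spans are just mine, for illustration.

```python
def scale_factor(months: float, period_months: float = 18.0, ratio: float = 2.0) -> float:
    """Multiplier after `months` if a quantity changes by `ratio` every `period_months`."""
    return ratio ** (months / period_months)

# Doubling every 18 months, compounded over ten years.
print(round(scale_factor(120), 1))
# The same curve run downhill (ratio 0.5), which is the shape of the
# battery-cost claim quoted earlier in this post.
print(round(scale_factor(120, ratio=0.5), 4))
```

The point of running it both ways is that the exponent does all the work: a decade of 18-month doublings is about two orders of magnitude, which is a lot to ask of battery chemistry.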

Why does Moore’s Law apply only here (and not to, say, battery capacity)? Is it because increasing transistor density “just” comes down to figuring out how to print circuit patterns onto silicon using increasingly shorter wavelengths of light, while “simply” managing to deal with the various weird physical effects that come into play when you’re working at nanometer scale, while designing the circuits for testability and robustness, etc? Can any of the physical techniques be applied to battery technology, to increase surface area, etc? I’d better stop here since I’m just guessing about this🙂.

Note that in the Friedman article, the metric was not battery capacity, volume, weight, or energy density but “cost per mile of the electric car battery”. That’s surely an important metric to focus on now and not a bad place to start; my guess is that getting the un-subsidized cost of a 100 mile range electric car to drop below, say, $20k, would be an interesting milestone. The Nissan Leaf, an all-battery vehicle with a 100 mile range, carries an MSRP of nearly $33k; after subsidies, Nissan estimates that the take-home price starts at $25k. At some point however, longer range will also become a distinguishing factor, and so battery energy density will become an important metric to track.

But at a higher level, the real metric is about the cost, density, or capacity of “energy storage”… there are other ways, besides batteries, for storing energy, such as: capacitors, flywheels, fuel cells, compressed air (yup), and spit (kidding!).

What does this have to do with ‘Bots? A lot. As energy storage technology (admittedly, mostly in the form of batteries) improves, resulting in smaller batteries that pack more of a punch (in terms of total energy stored and/or in terms of amount of energy that can be delivered in a given time period, or “power”), and/or can store energy for longer, then the set of scenarios you can envision with a self-contained connected device gets that much richer. Couple that trend with CPUs that can do more with less power, and with sophisticated ‘sleep’ modes, and you get even more leverage. Batteries that last 10 years are now commonplace… imagine a self-contained wireless device packing a battery that can power it for, say, 20 or 30 years… you’d start to think differently about the scenarios it would enable. You could, for instance, build them into semi-permanent structures: boot ‘em and forget ‘em… for security, maintenance, building health, and other kinds of monitoring (such as managing wilderness areas, tracking geologic events, and so on).
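Here's a back-of-the-envelope sketch of why sleep modes matter so much for a multi-decade device. All of the numbers (cell capacity, sleep and active currents, duty cycle) are hypothetical values I picked for illustration, and the model is idealized: it ignores self-discharge, temperature, and aging, which in practice dominate at these time scales.

```python
HOURS_PER_YEAR = 24 * 365

def average_current_ma(sleep_ma: float, active_ma: float, duty_cycle: float) -> float:
    """Time-weighted average draw for a device awake `duty_cycle` of the time (0..1)."""
    return active_ma * duty_cycle + sleep_ma * (1.0 - duty_cycle)

def battery_life_years(capacity_mah: float, avg_ma: float) -> float:
    """Idealized runtime: capacity divided by average draw, converted to years."""
    return capacity_mah / avg_ma / HOURS_PER_YEAR

# Hypothetical device: 2400 mAh cell, 5 uA asleep, 20 mA awake 0.05% of the time.
avg = average_current_ma(sleep_ma=0.005, active_ma=20.0, duty_cycle=0.0005)
print(f"{avg * 1000:.1f} uA average, {battery_life_years(2400, avg):.1f} years")
```

Play with the duty cycle and you'll see that the awake time, not the sleep current, is usually what you end up fighting.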

Those kinds of scenarios imply interesting requirements for the software that would power those devices, and the systems that would manage and monitor them. Unless you’re a programmer for a Mars rover, accustomed to not being able to reach over and hit the reset button at a moment’s notice, my guess is that achieving a world where devices go for 30 productive years possibly untouched by a human may take some work. It’s an interesting problem.

PS: Not to mention the environmental issues associated with the production of so many batteries and the challenge of recycling them when their useful life is over, or related health issues – have you ever seen a ‘leaking’ dry cell and the damage done to its immediate environment? (Added 6 October 2010:) And then there are the social / policy / privacy issues. More on that later.

PS: Watch any of the videos on this site (this one is my favorite) and ponder the technology advances that have made this form of ‘connected device’ commonplace, and a platform for frenzied experimentation and innovation: small, cheap, lightweight, powerful batteries, sensors (accelerometers, gyros, pressure sensors), cameras, compute devices, motors and associated electronic controllers, servos, GPS modules… all integrated and leveraged by sophisticated software.

‘Bots in the Basement

It’s one thing to write about this stuff, but it’s another thing entirely to get hands-on with it. Since I’m after some credibility here🙂, this post is about some of my own connected experiences, starting in the Home Automation space, in which I started dabbling around 2001.

I try to take a “scenarios-oriented” approach when messing with the house, given that its other occupants may not have a high degree of tolerance for things that don’t work as expected. It’s a goal that any new scenario adds value (in terms of safety, security, energy conservation, comfort, convenience, etc) in a seamless and reliable manner. Sure, sure, sounds good, right?

Here’s a list of scenarios implemented to date:

Lighting

- Turn off lights if rooms aren’t occupied, or after a certain amount of time

- If security system is armed, randomly cycle lights to simulate occupancy

Fans

- Turn off bathroom fans and Kitchen exhaust fan after 10 minutes

- Turn on bathroom fans if someone is taking a shower, run for 10 minutes after shower ends (this one took a while to pull off, but now it seems perfectly commonplace)

- Turn on Kitchen fan if high temperature detected over the stovetop

- Periodically, run fan in the cats’ litter box area

HVAC

- Set temperature back in all zones when no one is home (that is, when the security system is armed) and return to normal schedule when someone comes home (security system is disarmed)

- Periodically, set correct time on thermostats

- Periodically, run the hot water recirculation pump

Security

- Send email notice when security system is armed, disarmed, or when there’s an actual alarm

- Notify if garage door is left open, provide option for remotely opening/closing it.

- When someone comes home (and disarms the security system), turn on certain lights for convenience

- When security system is armed, randomly turn on/off certain lights at night to simulate occupancy

Irrigation

- Run sprinklers on regular schedule (depending on recent rainfall, as detected by local weather station)

A/V

- In main zone (living room): If DVD is turned on/off, also turn on/off receiver and monitor, and set receiver source accordingly, for main and 2nd zones. Ditto XBox

- In main zone (living room): If volume up/down is used on any remote, adjust receiver volume

- In Kitchen zone: if kitchen radio is turned on/off, also turn on receiver zone #2 and set source. Monitor volume keypad for key presses, and adjust zone volume accordingly.

Reminders, Warnings, and Notices

- Send email note reminding to take out Trash and Compost bins. Scrape city’s web site to also determine if it’s a Recycling day

- Send nightly reminder note to close apps for better backups

- If the security system is armed at 10pm, send a note summarizing the status of the automation system

- If temperature in computer rack or over kitchen stove top gets too high, warn

- Send note if temperature has dropped to near-freezing
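Many of the scenarios above boil down to the same pattern: remember when something turned on, and turn it off after a timeout. Here's a minimal sketch of that pattern in plain Python. This is not Homeseer's scripting API; the class and method names are hypothetical, and timestamps are passed in explicitly so the logic can be tried without waiting around.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class OffTimer:
    """Says when a device has been on for at least `timeout_s` seconds."""
    timeout_s: float
    turned_on_at: Optional[float] = None

    def on_device_on(self, now: float) -> None:
        self.turned_on_at = now

    def on_device_off(self) -> None:
        self.turned_on_at = None

    def should_turn_off(self, now: float) -> bool:
        return self.turned_on_at is not None and now - self.turned_on_at >= self.timeout_s

fan = OffTimer(timeout_s=10 * 60)       # the 10-minute bathroom fan rule
fan.on_device_on(now=0.0)
print(fan.should_turn_off(now=300.0))   # still inside the 10-minute window
print(fan.should_turn_off(now=600.0))   # window has elapsed
```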

These scenarios are implemented via these components:

Homeseer Home Automation server (http://homeseer.com): software installed on a small headless box running 7×24 in the basement. Presently, this represents the main controller and (browser-based) user interface for all home automation scenarios.

6Bit TCP/IP-controlled relay and input board (http://www.6bit.com – appears defunct at this time): I can telnet into this board and command any of its 6 relays to open/close or sense when any of 12 inputs go low. Can also download simple macros, such as: “close relay 1 and open in 10 seconds”. This board is used to: sense when the garage door is open (I mounted a switch on the door opener, a bit of a story in itself), and to sense when certain devices turn on (such as the DVD player, Xbox, or kitchen radio) or when buttons are pushed (such as the volume up/down buttons on the kitchen keypad), or when the security system signals an “armed” / “unarmed”, “normal” or “alarm”, or “motion in zone x”. The relays on the board are used to: close the garage door and energize any of the three sprinkler valves. Homeseer and the 6Bit box communicate with each other via a virtual COM port. The 6bit box also offers an HTTP service, so you can use a browser to configure it, etc. It uses a Lantronix XPort device (http://www.lantronix.com/device-networking/embedded-device-servers/?tab=0) to connect the board/sensor logic to the LAN. It’s too bad that this company appears to be defunct/zombied, as the board is well-designed and constructed. My guess is that if I had to replace it, I’d go the Arduino route.

Ha7NET 1-Wire Ethernet Host Adapter (http://www.embeddeddatasystems.com/HA7Net-Ethernet-1-Wire-Host-Adapter_p_22.html): You plug this small box into your LAN. You also plug into it your “1-Wire” networks. “1-Wire” really is “3-Wires”, but that’s OK, it’s only Marketing. 1-Wire devices are small, cheap, nominally intelligent devices. I use the DS18S20 temperature sensor, which looks like a small transistor and costs < $5. You can plop any number of these devices onto the same run of 3 wires: Signal, Ground, Power. Each device has its own unique ID, and implements a basic protocol for manipulating the 1-Wire bus in order to communicate with the host adapter. I have three little networks of these devices in my house (due to topology requirements of the 1-Wire bus), which all terminate at the host adapter. On the Homeseer controller application, there’s a plug-in (from Ultra1Wire) that knows how to find and communicate with the Ha7NET box to get regular (every couple of minutes) updates from the sensors. The Ha7NET box also offers an HTTP service that you can hit with a browser to configure the device, get an inventory of devices on the 1-Wire net, etc. Prior to the Ha7NET board, I used a Midon Design “TEMP08” board (http://midondesign.com/TEMP08/TEMP08.html) that connected to the Homeseer app via serial port. But I wanted to move away from serial-based boards…
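For the curious, here's a small Python sketch of two things that happen under the covers: validating a device's 64-bit ID with the Dallas/Maxim CRC-8, and turning a DS18S20's raw temperature register into degrees Celsius. The function names are mine, and the sample ID is just an illustrative byte string, not one of my sensors. The host adapter and plug-in do all of this for you; this only shows the arithmetic.

```python
def crc8_maxim(data: bytes) -> int:
    """Dallas/Maxim 1-Wire CRC-8 (polynomial x^8 + x^5 + x^4 + 1, bit-reflected as 0x8C)."""
    crc = 0
    for byte in data:
        crc ^= byte
        for _ in range(8):
            crc = (crc >> 1) ^ 0x8C if crc & 1 else crc >> 1
    return crc

def rom_id_is_valid(rom: bytes) -> bool:
    """A ROM ID is family code (1 byte) + serial (6 bytes) + CRC of those 7 bytes."""
    return len(rom) == 8 and crc8_maxim(rom[:7]) == rom[7]

def ds18s20_celsius(lsb: int, msb: int) -> float:
    """DS18S20 temperature register: signed 16-bit value, 0.5 degC per count."""
    raw = int.from_bytes(bytes([lsb, msb]), "little", signed=True)
    return raw * 0.5

rom = bytes.fromhex("021CB801000000A2")  # sample ID, family code byte first
print(rom_id_is_valid(rom))
print(ds18s20_celsius(0x32, 0x00), ds18s20_celsius(0xCE, 0xFF))
```

A handy property of this CRC: running it over all 8 bytes of a good ID, CRC byte included, yields zero.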

Davis Instruments Weather Station (http://www.davisnet.com/weather/products/vantagepro.asp): with a TCP/IP-enabled console. The console communicates with the Davis Instruments “http://WeatherLink.com” web site on a regular basis, sending up weather stats like temperature, wind, etc. The site also sends that info over to any of several popular weather sites such as http://weatherunderground.com. I wrote Homeseer scripts that regularly pull down relevant stats – such as recent rainfall – to guide the sprinkler schedule. So, it’s a bit roundabout - weather bytes travel from the weather station on my roof, to the console in our kitchen, and from there to the WeatherLink site, and then over to WeatherUnderground, and then back down to the Homeseer app running on a PC in my basement. Presumably they’re out of breath when they arrive. An interesting bit of system engineering, but it’s not always reliable (more on that later).
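The sprinkler decision itself is simple once the rainfall numbers arrive. Here's a minimal Python sketch; the function name, the 0.25 inch threshold, and the choice to fall back to the regular schedule when no data is available are all my assumptions for illustration, not what my actual scripts do line for line.

```python
def should_run_sprinklers(recent_rain_in, threshold_in=0.25):
    """Decide whether to water, given recent daily rainfall totals in inches."""
    if not recent_rain_in:
        # No data made it through the roundabout path: keep the regular schedule.
        return True
    # Skip watering when recent rain already meets the (assumed) threshold.
    return sum(recent_rain_in) < threshold_in

print(should_run_sprinklers([0.0, 0.05, 0.0]))
print(should_run_sprinklers([0.0, 0.4, 0.1]))
print(should_run_sprinklers([]))
```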

Z-Wave light switches and modules (http://www.z-wave.com/modules/ZwaveStart): Z-Wave is a specification / industry consortium for RF-based switches and controllers that enable remote control and sensing in the house. I have about 10 Z-Wave light switches installed throughout the house, and a Homeseer-branded Z-Wave controller connected to the Homeseer PC via serial port. As a result, Homeseer knows when someone has turned on a light in, say, the family room, and can be scripted to automatically turn it off after, say, 20 minutes. The Z-Wave light switches look mostly like regular Decora-style light switches, with some quirks and other characteristics. Z-Wave devices communicate via an RF mesh… each device spends some time getting to know its neighbors, forming primary and secondary paths to them based on signal strength. When a device wants to communicate to the controller (connected to the PC), it asks its neighbors to route the message along, via the mesh. The advantage in this approach is that the mesh can dynamically deal with sudden obstructions that might block a primary path, such as if a refrigerator door (a large metal object) is opened, or a car pulls into the garage, or permanent obstructions; in my house, only one or two of the Z-Wave devices have a clear (RF) view to the controller, as there are all sorts of heating ducts in the way (ever see the movie “Brazil”??). So the mesh concept comes in handy, but it does have its downsides, in terms of complexity, reliability, and ability to debug, which I’ll relate in a later post. But it’s a heck of a lot better than “X10” (http://www.x10.com), an earlier approach (based on sending signals over the AC powerline) to control and sensing in the home. (Some of you may remember how, in the early days of the web and pop-up ads, it seemed that every other ad was for X10…)
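To illustrate the idea of routing around an obstruction, here's a toy Python sketch. To be clear, this is not the actual Z-Wave routing algorithm; it's a plain breadth-first search over a made-up map of which devices can hear each other, with the device names and the blocked link invented for the example.

```python
from collections import deque

def find_route(links, source, target, blocked=frozenset()):
    """Breadth-first search for the fewest-hop path, skipping blocked links."""
    queue = deque([[source]])
    seen = {source}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == target:
            return path
        for neighbor in sorted(links.get(node, ())):
            link = frozenset((node, neighbor))
            if neighbor not in seen and link not in blocked:
                seen.add(neighbor)
                queue.append(path + [neighbor])
    return None  # no route at all

# Hypothetical layout: who can hear whom.
links = {
    "hall_switch": {"controller", "kitchen_switch"},
    "kitchen_switch": {"hall_switch", "den_switch"},
    "den_switch": {"kitchen_switch", "controller"},
    "controller": {"hall_switch", "den_switch"},
}
print(find_route(links, "hall_switch", "controller"))
fridge_door_open = {frozenset(("hall_switch", "controller"))}
print(find_route(links, "hall_switch", "controller", blocked=fridge_door_open))
```

With the direct link blocked, the message takes the long way around through the kitchen and den switches, which is the whole appeal of a mesh.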

Proliphix web-enabled thermostats (http://proliphix.com): Proliphix offers a line of high-quality thermostats that just happen to sport web interfaces and an API. So, you can hit the home page for one of these thermostats and see the current temperature in that room, the current heat/cool setpoints, the setback schedule, and so on. We have three zones in our little house (one for each of the basement, first floor, and second floor), and there’s a plug-in for Homeseer that surfaces key metrics and commands, so that it’s easy to, say, write a script that sets the heat setpoint back if no one’s in the house.

Security System: I’ve installed a mid-level security system, which, until recently, really didn’t want to play well with the other systems. I managed to arrange its typically archaic programming of zones and sensors so that it closes relays for certain conditions (system armed/disarmed, alarm, activity in certain zones), which are connected to the 6bit board’s input sensors, which Homeseer can track. The end-result is that Homeseer knows about certain security system events, and I can script scenarios such as this: if the security system is armed, set back the Proliphix thermostats to save on heating costs.

USB-UIRT IR receiver/transmitter PC interface (http://www.usbuirt.com): this is a small box that connects via USB to the PC running the Homeseer control application, and is apparently manufactured by a guy in a garage🙂. Homeseer can sense (via a plug-in) when certain IR signals have been received (after training) or transmit IR signals (after training). The result is that I can write scripts like this: “if the volume up button is pressed on the Xbox remote control, then send a signal to the Denon receiver to increment the volume”.

Other elements

I have a Denon receiver with an RS-232 port on the back, which enables communication with a PC or controller. The receiver is connected via serial cable (straight-through! straight-through!!) to the PC running Homeseer. I’ve written scripts for Homeseer which can read responses from, and write commands to, the receiver. So, for instance, someone changes the volume on the receiver – either directly, by turning the knob, or via a remote – the receiver dutifully outputs some characters through the serial port, and my script running on Homeseer can track the changes (updating Homeseer variables which represent the volume level). Similarly, I can, via script, programmatically change the volume on the receiver. Or, I can script Homeseer so that if the DVD player is turned on, the receiver is turned on and its input source set to “DVD”. There’s a similar arrangement with the monitor in the living room. I’ll post more details on how the A/V system is scripted, as I think the resulting level of simplicity it affords to the end-user (no more confusion about remotes, and no more need for universal remotes) is worth sharing.
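Here's a rough Python sketch of the "read responses, track state" half of that arrangement. The message format is an assumption on my part for illustration: ASCII text, carriage-return terminated, with a two-letter prefix such as "MV" followed by a numeric volume or "SI" followed by a source name. Check your own receiver's protocol document before trusting any of it. The sketch works on a plain byte buffer, so no serial library is involved.

```python
def split_messages(buffer: bytes):
    """Split a serial byte stream into complete CR-terminated messages plus leftover bytes."""
    *complete, leftover = buffer.split(b"\r")
    return [m.decode("ascii") for m in complete if m], leftover

def apply_message(state: dict, message: str) -> None:
    """Update the pieces of receiver state this sketch cares about (assumed prefixes)."""
    if message.startswith("MV") and message[2:].isdigit():
        state["volume"] = int(message[2:])
    elif message.startswith("SI"):
        state["source"] = message[2:]

state = {}
# The last message is incomplete, as often happens when reading a serial port.
messages, leftover = split_messages(b"MV45\rSIDVD\rMV4")
for m in messages:
    apply_message(state, m)
print(state, leftover)
```

Keeping the leftover bytes and prepending them to the next read is the part that's easy to forget, and it's the usual cause of "the volume display is sometimes wrong" bugs.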

Some additional, quick notes

- I’ve tried to move away from serial-based connections (RS-232, USB, etc) and towards TCP/IP, since the resulting simplicity and flexibility (in topology, distance, etc) is worthwhile. The 6bit relay/sensor board, Ha7NET 1-Wire host adapter, and the Proliphix thermostats listed above all communicate via Ethernet. However, I found it much easier to program, and more reliable, to stick with the antique RS-232 interface for integrating with the A/V components (receiver, monitor, IR controller). More on this later.

- I have this hang-up/obsession around the importance of instant and relevant feedback when dealing with the humans in the house (at least, when it comes to the home automation🙂). If someone presses a button and nothing appears to happen quickly – even if, under the covers, millions of compute cycles are cycled, thousands and thousands of disk seeks are commanded, and umpteen packets are zipped around LAN and WAN, all in service of that human’s simple action – that stinks. The human is left wondering… did I do something wrong? Or did this stupid system fail again? More on this later.

- Another hang-up/obsession: I don’t have (much) patience for systems that fail, especially those that fail silently. I’ll go out of my way – in the form of extra coding, hardware, wiring, etc – to ensure that systems are built to be reliable, and that if they do fail, they don’t fail silently. More on this later.
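One cheap way to avoid silent failures is to record when each component last reported in and complain about anything that has gone quiet. Here's a minimal Python sketch of that check; the source names, timestamps, and the five-minute limit are hypothetical, and what you do with the result (email, log entry, blinking light) is up to you.

```python
def stale_sources(last_seen: dict, now: float, max_age_s: float) -> list:
    """Return the names of sources that have not reported within `max_age_s` seconds."""
    return sorted(name for name, ts in last_seen.items() if now - ts > max_age_s)

# Hypothetical last-report times, in seconds.
last_seen = {"1wire_adapter": 1000.0, "weather_feed": 100.0, "zwave_controller": 990.0}
print(stale_sources(last_seen, now=1010.0, max_age_s=300.0))
```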

All together, I think this loose constellation of systems and components, held together with lots of Homeseer scripting, does add value in a mostly seamless or even invisible way. There’s lots of room for improvement (for instance, extended power failures can be tough to recover from…). And if viewed from the perspective of, “Is this ready for the mainstream??”, the answer is a clear “No!”. More on this later.🙂

‘Bot Trends, or, “How Did We Get Here?”

So, what did a ‘bot geek do for fun hundreds of years ago?

My guess is that they hacked around with clocks, especially if the money was good. The first accurate, portable timekeeping devices were made possible through extreme cleverness and a willingness to consider alternate perspectives on the part of their inventors, coupled with advances in metallurgy and other technologies.

It’s interesting that those first watches were rather small; Harrison’s “H5”, which, like its predecessors H1 through H4, took years for him to construct (early 1770s), was designed to fit in one’s pocket, and was accurate to one-third of a second a day… which is likely more accurate than today’s average cheap wristwatch.

Fast forward a couple hundred years, and we’re faced with the spectacle of the “Spot Watch”, which sports a CPU, memory, display, and a radio that receives data over an FM sub-carrier. Via the 1-way data link, it can keep its internal clock synced with that of the larger cosmos, but more interestingly (to the geek), applications can be downloaded to it for local execution. All in a package that fits on your wrist.

You may ask yourself, How did we get here? Well, as you might expect, Moore’s Law plays the leading role on many fronts; it’s also why the phone in your pocket likely has more compute and memory power than that of several Apollo launch vehicles combined.

All this is well and good… it’s been fun riding the wave of shrinking-but-more-powerful computing devices.

But what I think is especially interesting now is that additional trends have coupled into Moore’s Law in a sort of Geek Perfect Storm, opening up what feels like a whole new frontier for those who play with ‘bots. Building on the foundation of Moore’s Law, we have:

- New capabilities in the form of sensors, displays, GPS functions, and sophisticated 2-way wireless systems are entering the hacker mainstream at lower and lower price points, and continuing to drop in price from there. For instance, you can buy a hobbyist-friendly GPS receiver for $20, a multiple-axis accelerometer or RFID receiver for $25, a color display similar to what you’d find on a feature phone for $15, a basic wireless transmit/receive set for $10, or a sophisticated wireless mesh network (based on the ZigBee specification) starting at $25 a node. So, for under $100, you could put together an interesting gadget, perhaps controlled by a $20 Arduino compute module. (Prices pulled from here.)

- High levels of chip/function integration that have driven costs down for the average consumer ($29 DVD player anyone?) have also benefited the ‘bot geek. For instance, you can buy a module that looks like an RJ-45 socket for your ethernet cable, but it just so happens to implement a TCP/IP stack and throws in a general-purpose Linux operating system environment for your apps for good measure. Or, there’s the 3.2″ color touchscreen which includes a general-purpose computing environment, a number of I/O pins, a speaker, an SD card slot, and the ability to read FAT files… for $80, and in a package not much bigger than your computer mouse.

- The Rise of The Maker: “Maker”, a term popularized by O’Reilly’s “Make” magazine (not to mention Daniel Lanois), describes a philosophy (and perhaps a cultural belief system) wherein a high value is placed on the practice of taking things apart to learn how they work, how to make them better, how to re-purpose them to serve new ends or just for fun, and keep them out of landfills in the process. There have always been “Makers” - I remember some old guys in the neighborhood where I grew up who would scavenge radios and TVs from the curb on trash day. But while Moore’s Law brought prices down and complexity up, it also meant that when you tear into your PC’s dead DVD burner… there’s practically nothing inside for you to mess with. It’s not very satisfying… unless, of course, you pry off that laser and find a way to burn stuff with it. Makers assert that manufacturers should design their stuff to be more open, to encourage repair and hacking. At some point, this same crowd gets around to building new stuff, perhaps atop the old stuff, and turns to the kind of cheap hardware and easy software integration mentioned above to pull off their exploits.

- The Role of Open Source and Community: Software developers are familiar with the value of “Open Source” software and the associated communities of developers. If you’re able to build new software by leveraging existing, debugged, and community-supported modules, the overall velocity of your project increases, and so does the value of the community if you contribute your work back. Well, the concept works with hardware also. Example: in the hacker space, there’s the Arduino, “an open-source electronics prototyping platform”. Multiple vendors offer Arduino-spec’d compute modules with standard connectors and add-ons (“shields”). This helps sustain a positive feedback loop (sometimes assisted by “Hacker Spaces”, such as “NYC Resistor”, or events such as “Maker Faire”) in the form of a fervent community who are happy to help newcomers bootstrap their own crazy ideas, and vendors who sometimes adopt them into new products of their own, or at least support interoperability (surprising example here). The resulting leverage is amazing; you’d be surprised by what you can throw together in a weekend. The ultimate expression of this is an open source ‘bot that can “print” in three dimensions, and can thus make arbitrary things (potentially even all of the parts necessary to make a copy of itself). Such devices - such as the RepRap or the MakerBot - can be driven by open source designs found in community-driven libraries such as Thingiverse.

- The Magic of (high-level) Software: No more assembler! For instance, the Arduino project includes a sophisticated software development environment and run-time libraries. If you’re a beginner gadget Geek, you’re going to move a lot faster if you can write in a high-level language similar to one that you probably already know, and leverage run-time libraries that abstract away the details of interfacing with the hardware.

We might best identify the Ham Radio operators from a generation ago as ancestors to today’s Geeks / Makers (and perhaps these guys, also). Ham Radio operators had a strong sense of community which encouraged the sharing of designs for rigs, antennas, and exploits (extreme distance, lowest power, video, etc). They used their own medium - Ham Radio - as the basis for their community. It was probably a lot of fun to communicate with someone on the other side of the world using a radio you built yourself.

Similarly, today’s connected devices seek to be part of the internet, which is the largest (and most chaotic and distributed) device in its own right. The internet is also the foundation for the very active device community… so, as with Ham Radio, the medium and the community are the same. Perhaps more so, since today a connected device can host its own web site and potentially participate actively in the community that created it.

The upshot of all this is that it’s a great time to be thinking about connected devices, for fun and profit.

What’s a ‘bot, anyway?

Well, “I know one when I see it”. More helpfully, I’d say that a ‘bot is a widget, gadget, or device that is ‘connected’ to the outside world and can say something about its own state, or respond to commands. Thus, the difference between, say, a nutcracker (a gadget) and an iPod Touch (another gadget) is that the latter can run code and communicate with, say, a web site.

A PC is a ‘bot, but the focus here is on special-purpose or single-purpose smart devices that can run code and communicate with the outside world. Examples include: smart thermostats, your car (in the not-too-distant future), and MER-A and MER-B, much better known as Spirit and Opportunity.

Gadgets are much more interesting when they’re connected to each other and, perhaps, to occasionally-controlling computers, whether in your house, car, or backpack. Metcalfe’s law may be part of the reason.

The implication of Moore’s law, along with implicit corollaries for energy storage technologies (batteries, capacitors, etc) - is there a law yet in Wikipedia for this?? - is that we’ll be seeing more ‘bots around us, doing more on our behalf, at greater price efficiencies. In some cases, if these things are designed and deployed well, they’ll actually simplify things for their human overlords and may even seem to be performing magic on our behalf. Of course, given that they’re just so much hardware and software designed by those same humans, there’s a fair chance they might not actually help things at all, either.

These are the topics I hope to explore a bit in these posts, with a mixture of examples from my own experiences and, with any luck, some high-level musings about what could be.

(6 October, 2010: updated for spelling)